A language model (LM) is a probability distribution over sequences of tokens. The tokens are words in a vocabulary, so a sequence is a phrase. Each phrase fragment gets a probability, and the probability is higher for fragments that are good phrases, where good means grammatically correct and semantically plausible. Goodness depends heavily on extra information that is not spelled out in the text, and often not in the training set either. For example, the sentence “I saw the Golden Gate Bridge flying into San Francisco” needs contextual knowledge: bridges are stationary, and “I” refers to a person on the plane. The LM therefore has to judge the semantic plausibility of a phrase fragment, not just its grammar. This is what makes LMs deceptively simple and easy to get wrong.
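To make "each phrase fragment gets a probability" concrete, here is a minimal sketch of a count-based bigram model. It is a deliberate simplification: a real LM conditions on far more than the previous token. The three-sentence corpus and the names `train_bigram` and `sequence_probability` are invented for illustration.

```python
from collections import Counter

# Tiny made-up corpus, purely for illustration.
CORPUS = [
    "the mouse ate the cheese",
    "the cat ate the mouse",
    "the cat saw the cheese",
]

def train_bigram(sentences):
    """Estimate p(next | prev) from raw counts (no smoothing)."""
    pair_counts, prev_counts = Counter(), Counter()
    for sentence in sentences:
        tokens = ["<s>"] + sentence.split()  # <s> marks the sentence start
        for prev, nxt in zip(tokens, tokens[1:]):
            pair_counts[(prev, nxt)] += 1
            prev_counts[prev] += 1
    return {pair: n / prev_counts[pair[0]] for pair, n in pair_counts.items()}

def sequence_probability(model, sentence):
    """Multiply p(token | previous token) over the whole sequence."""
    tokens = ["<s>"] + sentence.split()
    prob = 1.0
    for prev, nxt in zip(tokens, tokens[1:]):
        prob *= model.get((prev, nxt), 0.0)  # an unseen pair gets probability 0
    return prob

model = train_bigram(CORPUS)
good = sequence_probability(model, "the mouse ate the cheese")
bad = sequence_probability(model, "cheese the the ate mouse")
print(good, bad)
```

The well-formed sentence gets a non-zero probability, while the scrambled ordering of the same five words gets zero because the toy model never saw a sentence start with "cheese". A model this small knows nothing about semantic plausibility, which is exactly the gap the paragraph above describes.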

Mathematically, the phrase fragment is called a sequence of tokens, and the tokens are drawn from a set “V”, the vocabulary of the language. A probability distribution over four-token sequences assigns a different probability to each ordering of the four tokens. Some orderings are semantically implausible and others are syntactically incorrect, so they get low probability.

So far we haven’t done any generation, but the LM supports that too: given a sequence of tokens, say four, we can draw five-token sequences that extend it and judge each on its “goodness”. Generation is conditional on the prefix sequence, or “prompt”, and the text that gets generated is the “completion”. To continue the sentence above, the prompt is “I saw .. sf” and candidate completions include “on saturday” and “yesterday”. Both make good sentences, so both get high probability, and we need a new parameter to pick one over the other.

This parameter is the randomness of the search. If we are strict, we always pick the highest-probability next token at every step. If we are lenient, we randomize the next pick. The parameter is called temperature; it ranges from strict to lenient and controls the variability of the generation. This is the big problem with the LM: lenient generation can create absurd sentences and even total fabrications, while strict generation produces template-like text. On top of that, the output relies heavily on the prompt. So we now have the mathematical vocabulary of generation: a prompt, a completion and a temperature.

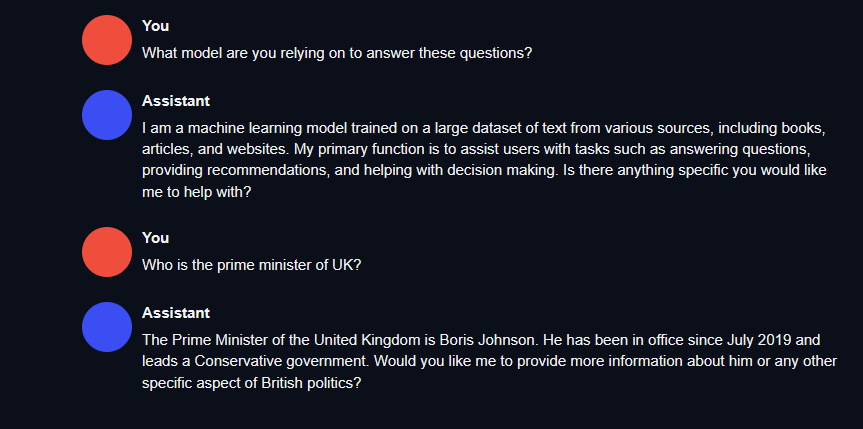
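The strict versus lenient behavior of temperature can be sketched with a temperature-scaled softmax over next-token scores. This is one common way to implement it, not necessarily what any particular model does. The candidate completions and their scores (`CANDIDATES`, `LOGITS`) are invented for illustration, as are the function names.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; a lower temperature sharpens them."""
    scaled = [x / temperature for x in logits]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next(candidates, logits, temperature, rng):
    """Pick the next token: greedy at temperature 0, random sampling otherwise."""
    if temperature == 0:
        return candidates[logits.index(max(logits))]  # strict: always the top pick
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(candidates, weights=probs, k=1)[0]  # lenient: sample

# Hypothetical scores for completing the prompt "I saw .. sf" (made-up numbers).
CANDIDATES = ["yesterday", "on saturday", "bananas"]
LOGITS = [2.0, 1.5, -1.0]

rng = random.Random(0)
strict = sample_next(CANDIDATES, LOGITS, 0, rng)
cold = softmax_with_temperature(LOGITS, 0.5)
hot = softmax_with_temperature(LOGITS, 2.0)
print(strict, cold, hot)
```

At temperature 0 the completion is always “yesterday”. At a low temperature almost all the probability mass sits on the top candidate, and at a high temperature the distribution flattens, so the implausible “bananas” gets picked more often.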

A slight change to the prompt can generate an entirely different sentence. A longer prompt also changes the generation, and any foreign words in the prompt, perfectly normal in spoken English, can influence the generation in unpredictable ways. The number of tokens in the prompt is a big determinant of the computational complexity of LMs. A model that processes the tokens one after another, such as an RNN (Recurrent Neural Network), takes a very long time to train because the steps cannot run in parallel. A transformer instead applies attention over a fixed-length window of prior tokens and exploits the parallelism of GPUs; the fixed window limits the context available to the generation, and with it the accuracy. The parallelism of transformers enables large LMs, large as in a trillion parameters. What was observed in late 2022 was that, as the models got larger, fixed-length generation coupled with temperature produced sentences that had never existed before. This is called emergent behavior, and it is the beginning of “learning”. Repeated prompts on the same topic lead the LM to home in on the context, which makes the completions sound more like spoken language and less like an automaton. This emergent behavior, observed without changing the model (no new gradient descent steps), is what is causing most of the hype around Generative AI. As the model homes in on a context, the generation feels conversational, as if the model were passing a Turing test.
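As a rough illustration of why prompt length drives compute, the sketch below counts two quantities for a prompt of `n_tokens`: the dependent steps an RNN must run one after another, and the token-pair scores that standard full self-attention computes over its window. It is a back-of-the-envelope count under simplifying assumptions (hidden size, layers, heads and masking are all ignored), and the function names are hypothetical.

```python
def rnn_sequential_steps(n_tokens: int) -> int:
    """An RNN consumes tokens one at a time: n steps that cannot overlap."""
    return n_tokens

def attention_pair_scores(n_tokens: int) -> int:
    """Full self-attention scores every token against every token: n * n."""
    return n_tokens * n_tokens

for n in (512, 2048, 8192):
    print(n, rnn_sequential_steps(n), attention_pair_scores(n))
```

The attention count grows quadratically, so a prompt four times longer needs sixteen times as many scores. Those scores are independent of each other, though, so a GPU can compute them in parallel, whereas the RNN's steps have to wait on one another.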

The biggest challenge for Gen AI as an industry is not to create larger and larger models, but to slice and dice an existing LLM down to a size that can be deployed at scale. We can see the sizes of large models in this paper, reproduced below. GPT-4 is rumored to be over 1 trillion parameters as well. Training costs around $28K per 1 billion parameters, but that is a one-time cost. The continual cost is in the sliced and diced version, perhaps running on a phone, which needs to cost no more than a penny per 1,000 generations.
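The two cost figures above can be put side by side with a little arithmetic. This sketch assumes training cost scales linearly with parameter count, which is an assumption made here for illustration. The dollar figures are the ones quoted in the text and the function names are hypothetical.

```python
# Figures quoted in the text; linear scaling with parameter count is an
# assumption made for illustration only.
TRAINING_COST_PER_BILLION_USD = 28_000
TARGET_COST_PER_1000_GENERATIONS_USD = 0.01

def training_cost_usd(params_billions):
    """One-time training cost under the linear-scaling assumption."""
    return TRAINING_COST_PER_BILLION_USD * params_billions

def per_generation_budget_usd():
    """Inference budget for a single generation at the target price."""
    return TARGET_COST_PER_1000_GENERATIONS_USD / 1000

print(f"1T-parameter model: ${training_cost_usd(1000):,.0f} one-time")
print(f"Budget per generation: ${per_generation_budget_usd():.5f}")
```

Under these assumptions a 1 trillion parameter model costs about $28 million to train once, while each deployed generation has a budget of one thousandth of a cent.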

The models also need to be trained on proprietary and current data for them to generate economic value beyond research.